December 19, 2025

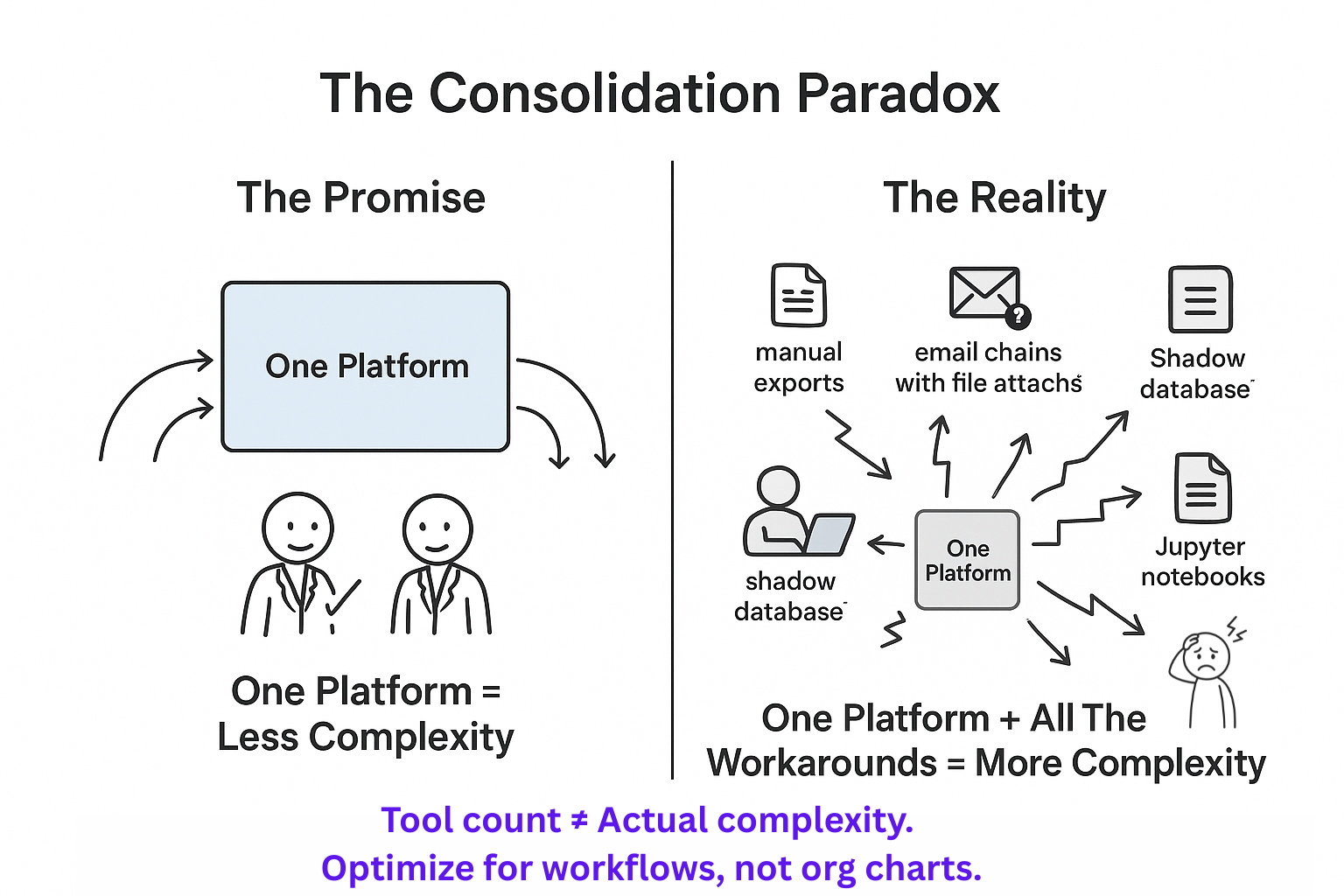

The Tool Consolidation Paradox: Why Fewer Tools Often Means More Complexity

“We’re spending too much money on tools. Let’s consolidate everything into one platform.”

I’ve watched this play out at multiple biotech companies. Leadership launches a consolidation initiative - one enterprise platform, unified access, cleaner org chart. It sounds logical on paper.

Then the problems start.

At one company, scientists panicked when they heard through the grapevine that Excel was being eliminated. IT had to issue a clarification, but the damage was done - scientists no longer trusted the process.

At another, the people who actually used the tools daily were never meaningfully consulted. Leadership talked to vendors, not to the scientists who’d need to change their workflows.

The pattern I keep seeing: change management budgets are a fraction of what’s needed, timelines assume instant behavior change, and initiatives get quietly scaled back when reality hits.

The expensive platforms become expensive reminders: you can’t consolidate your way out of complexity you don’t understand.

What Actually Happens

When you force consolidation without understanding workflows:

Scientists develop workarounds - Excel exports, email file shares, shadow databases. Your “unified platform” becomes something people route around.

Analysis time increases - Ten-minute tasks in specialized tools now take an hour. You’ve traded tool complexity for workflow complexity.

Technical debt migrates - Instead of maintaining tool integrations, you’re maintaining workarounds and manual transfers. The debt didn’t disappear - it became invisible.

The Better Approach

Strategic integration beats forced consolidation:

- Keep specialized tools scientists actually use

- Build API integrations for automatic data movement

- Implement unified authentication

- Centralize metadata that works across tools

- Standardize output formats for interoperability

This optimizes for the work, not the vendor list.
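As a rough illustration of the "standardize output formats" and "centralize metadata" items, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `ResultRecord` schema, the two adapter functions, and the field names in the sample rows are hypothetical, not taken from any real tool. The idea is simply that each specialized tool keeps its own export format, and a thin adapter maps it into one shared record.

```python
from dataclasses import dataclass, asdict
import json


@dataclass(frozen=True)
class ResultRecord:
    """Hypothetical shared schema that every tool's output is mapped into."""
    sample_id: str
    assay: str
    value: float
    unit: str
    source_tool: str


def from_plate_reader(row: dict) -> ResultRecord:
    # Assumed export layout of a plate-reader tool (illustrative only).
    return ResultRecord(
        sample_id=row["Sample"].upper(),
        assay=row["Assay"],
        value=float(row["OD600"]),
        unit="OD600",
        source_tool="plate_reader",
    )


def from_lims(row: dict) -> ResultRecord:
    # Assumed export layout of a LIMS (illustrative only).
    return ResultRecord(
        sample_id=row["sample_id"].upper(),
        assay=row["assay_name"],
        value=float(row["result"]),
        unit=row["units"],
        source_tool="lims",
    )


# Two tools, two formats, one shared record type downstream.
records = [
    from_plate_reader({"Sample": "S-001", "Assay": "growth", "OD600": "0.42"}),
    from_lims({"sample_id": "s-001", "assay_name": "titer",
               "result": "1.8", "units": "g/L"}),
]

print(json.dumps([asdict(r) for r in records], indent=2))
```

The scientists' tools stay as they are; only the adapters need to change when a tool is added or replaced.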

When Consolidation IS Right

Sometimes consolidation makes sense:

True redundancy - Three teams bought different tools for identical workflows

Not actually specialized - Generic visualization that could happen in your main platform

Training overhead exceeds benefits - Learning the tool costs more time than the tool saves

Integration costs exceed consolidation costs - Sometimes this is genuinely true

Before You Consolidate

Ask these questions:

What workflows actually change? Not features - day-to-day scientist behavior

Who gets consulted? Talk to tool users before vendor demos

What’s the real change management cost? Training at scale isn’t cheap

What’s the realistic timeline? Organizational change is slow

Who’s optimizing what? Vendor management or scientific productivity?

The companies that manage tool sprawl successfully don’t do it through consolidation mandates. They build strategic integration architecture - central data warehouses, standardized APIs, clear governance, self-service frameworks.

This lets scientists use the right tool for each job while maintaining data consistency.

What Complexity Actually Means

Tool count is a proxy metric. What you actually care about:

- How long to answer a scientific question?

- How much manual data movement?

- How often do scientists hit technical friction?

- How much knowledge is locked in individual tools?

Optimize for those. Sometimes fewer tools. Usually better integration.
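One way to make those questions measurable, sketched in Python under stated assumptions: the log format, the step names, and the `friction_metrics` helper are all hypothetical, and the numbers are made-up sample data rather than results from any real team.

```python
from statistics import median

# Hypothetical log: one entry per scientific question, with the hours it
# took to answer and the steps involved. Sample data for illustration only.
questions = [
    {"hours_to_answer": 4.0,
     "steps": ["query", "manual_export", "analysis"]},
    {"hours_to_answer": 1.5,
     "steps": ["query", "analysis"]},
    {"hours_to_answer": 9.0,
     "steps": ["manual_export", "email_transfer", "analysis", "manual_export"]},
]

# Assumed labels for steps that count as manual data movement.
MANUAL_STEPS = {"manual_export", "email_transfer"}


def friction_metrics(log):
    """Summarize time-to-answer and manual data movement for a non-empty log."""
    all_steps = [step for q in log for step in q["steps"]]
    manual = sum(1 for step in all_steps if step in MANUAL_STEPS)
    return {
        "median_hours_to_answer": median(q["hours_to_answer"] for q in log),
        "manual_step_share": manual / len(all_steps),
        "questions_with_manual_steps": sum(
            1 for q in log if MANUAL_STEPS & set(q["steps"])
        ),
    }


metrics = friction_metrics(questions)
print(metrics)
```

Tracking numbers like these before and after a tooling change shows whether the change reduced friction, regardless of how many tools remain on the vendor list.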

The best infrastructure is invisible to scientists and makes both the scientists and IT successful.

What tool decisions has your team faced? How did you balance consolidation vs. integration?